As artificial intelligence increasingly takes over tasks, our latest expert-led SGS Live discussion examines the important subject of legal accountability.

As technology advances, artificial intelligence (AI) is taking over more and more tasks, seemingly independently. But the use of AI also poses risks. For example, an intelligent algorithm might discriminate against people, and intelligent robots (including self-driving cars) can cause major harm. This raises the question of who is responsible when damage or accidents occur, and legislators and other stakeholders around the world are proposing and creating regulations for AI.

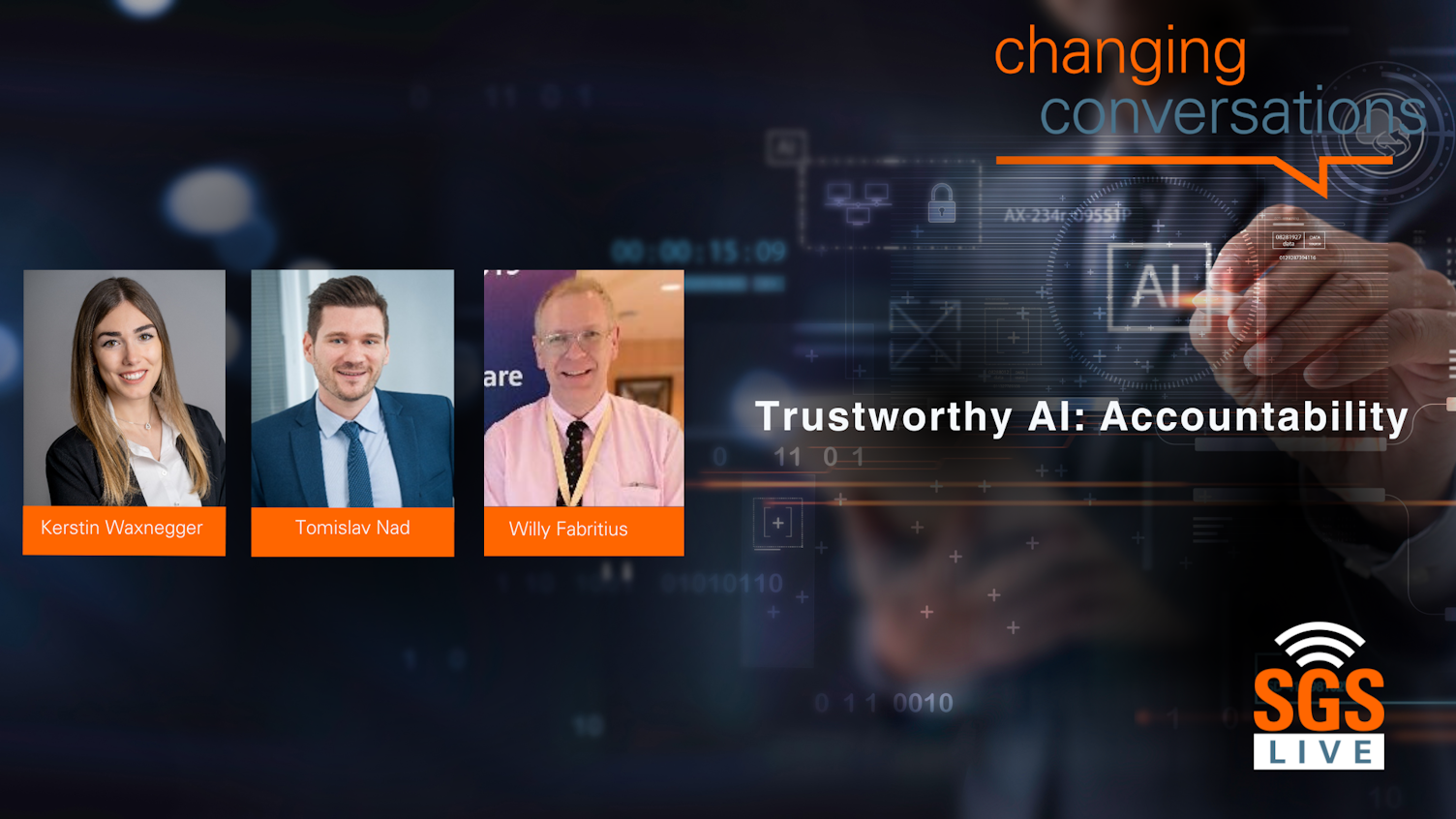

SGS experts Willy Fabritius (Global Head of Strategy & Business Development Information Security) and Tomislav Nad (Lead Innovation Technologist) were joined by Kerstin Waxnegger, a legal expert and data protection officer at Know-Center GmbH, in our latest SGS Live to discuss legal accountability in AI. They also gave an overview of the most important AI regulations and regulatory initiatives, as well as AI certification.


About our “Trustworthy AI: current areas of research and challenges” series

The need for trustworthy AI systems is recognized by many organizations across government, industry and academia. As AI systems become more widely used by both organizations and individuals, it is important to establish trust in them. To that end, numerous white papers, proposals and standards have been published, and more are in development, to educate organizations on the need for and uses of AI systems. Join us for our series as our experts discuss a variety of topics related to building trust in, and understanding of, AI systems.

About SGS

We are SGS – the world’s leading testing, inspection and certification company. We are recognized as the global benchmark for sustainability, quality and integrity. Our 99,600 employees operate a network of 2,600 offices and laboratories around the world.

38, Calea Serban Voda, 040212, Bucharest, Romania